In May 2024, Google unveiled new voice and video search capabilities for Google Lens at its I/O event. Now available in Search Labs on Android and iOS, these features let users ask questions aloud while pressing and holding the shutter button. Using a custom Gemini model, video search provides AI-generated responses based on the captured content, making Google Lens more intuitive and useful in everyday situations. Below, we look at the new Google Lens features in more detail.

New Google Lens features:

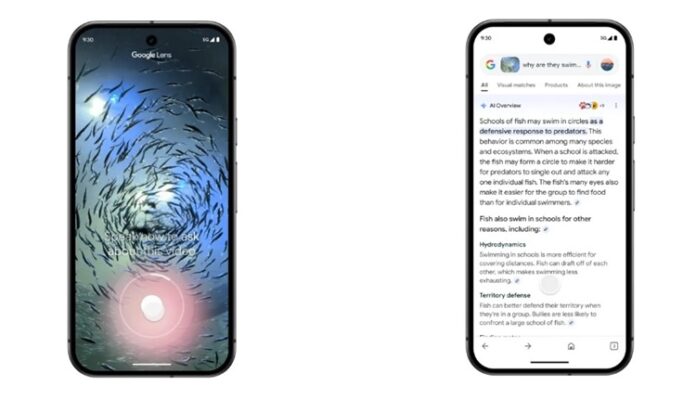

The new video search feature in Google Lens allows users to search for information by capturing video. When you point your phone at something and press and hold the shutter button, Lens starts recording. You can then ask a question aloud about what you’re seeing. For instance, if you’re at an aquarium and see fish swimming together, you can ask, “Why are they swimming together?” Google Lens will respond with an AI-generated answer.

Currently, the voice search feature supports only English queries, but Google plans to expand this in the future. Lens returns an AI Overview along with relevant search results, based on your question and the video content.

What does Google’s Vice President say?

Rajan Patel, Google’s vice president of engineering, explained that the video is processed as a series of image frames. Google Lens uses the same computer vision techniques as before but with improvements. The custom Gemini model understands multiple frames in sequence and gathers information from the web to provide accurate responses.
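To make the "series of image frames" idea concrete, here is a minimal Python sketch of how a short clip might be reduced to an ordered list of frames before a model looks at them. This is an illustration only, not Google's actual pipeline: the function name `sample_frame_indices` and the one-frame-per-second sampling rate are assumptions made for the example.

```python
def sample_frame_indices(total_frames: int, fps: float,
                         samples_per_second: float = 1.0) -> list[int]:
    """Pick evenly spaced frame indices from a clip, in their original order.

    Hypothetical helper for illustration; the sampling rate is an assumption.
    """
    if total_frames <= 0 or fps <= 0 or samples_per_second <= 0:
        return []
    # Never sample more densely than the clip's own frame rate.
    step = max(1, round(fps / samples_per_second))
    return list(range(0, total_frames, step))


# A 3-second clip recorded at 30 frames per second, sampled once per second.
indices = sample_frame_indices(total_frames=90, fps=30.0)
print(indices)  # [0, 30, 60]
```

Keeping the indices in order matters here: a question such as “Why are they swimming together?” depends on how the scene changes from one frame to the next, not on any single image.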

Conclusion:

This update makes Google Lens more useful by letting you ask questions about moving objects and scenes. To use the video search feature, you need to join the “AI Overviews and more” experiment in Search Labs. Overall, these enhancements build on existing technologies and give users a practical way to learn more about their surroundings.